Aaron Foyer

Director, Research

An introduction to the hottest topic in energy

In the summer of 1958, Jack Kilby, an electrical engineer at Texas Instruments, was enduring one of the worst droughts in Texas history. Stuck inside with time to spare, he set about solving an emerging problem in electronics: how to shrink the electronic circuit.

At the time, circuits relied on transistors to amplify electrical signals. These were bulky devices that resembled the alien tripods from War of the Worlds. A circuit with 1,000 transistors could require 10,000 hand-soldered connections, which is not exactly scalable or cheap.

That September, Kilby showed off a prototype of his miniature electronic circuit, made of germanium, aluminum and gold, all on a single chip. Despite looking more like a high school science project gone wrong, it worked, and it may have been the most important invention of the 20th century. The National Academy of Sciences credited Kilby’s integrated circuit with launching the Second Industrial Revolution.

A photograph of Jack Kilby’s Model of the First Working Integrated Circuit Ever Built circa 1958. (Photo by Fotosearch/Getty Images)

The importance of the invention took even Kilby by surprise. “What we didn’t realize (when we developed the integrated circuit) was that it would reduce the cost of electronic functions by a factor of a million to one,” said Kilby.

Fast forward to 2024: It’s safe to assume Kilby also didn’t anticipate that warehouses packed with millions of integrated circuits would one day send the entire American power sector into a furor, but here we are. Data centers are, to borrow from the critically acclaimed movie, Everything Everywhere All at Once.

If you’re worried that we’re too far into the data center boom now to ask questions like what exactly hyperscalers are and why servers use so much electricity, you’re not alone. Here’s an overview of data centers.

To start, data centers have been around for a while. The first were built during World War II, well before Jack Kilby’s integrated circuit. At the time, the US military used data centers to run one of the world’s first programmable computers, which it put to work calculating artillery fire and supporting nuclear weapons research. Their development was supercharged by the Cold War, and companies like IBM helped commercialize data centers for industry.

Enter AI: Skipping over several key chapters like the development of the microprocessor, the rise of the personal computer, the launch of the internet, the dot-com boom, Counter-Strike LAN parties, smartphones and cloud computing, we arrive in the early 2020s. OpenAI’s launch of ChatGPT, an artificial intelligence (AI) chatbot, in late 2022 captured the public’s imagination about the technology’s potential. Shortly thereafter, attention turned to the huge energy needs of the data centers required to train and operate these programs.

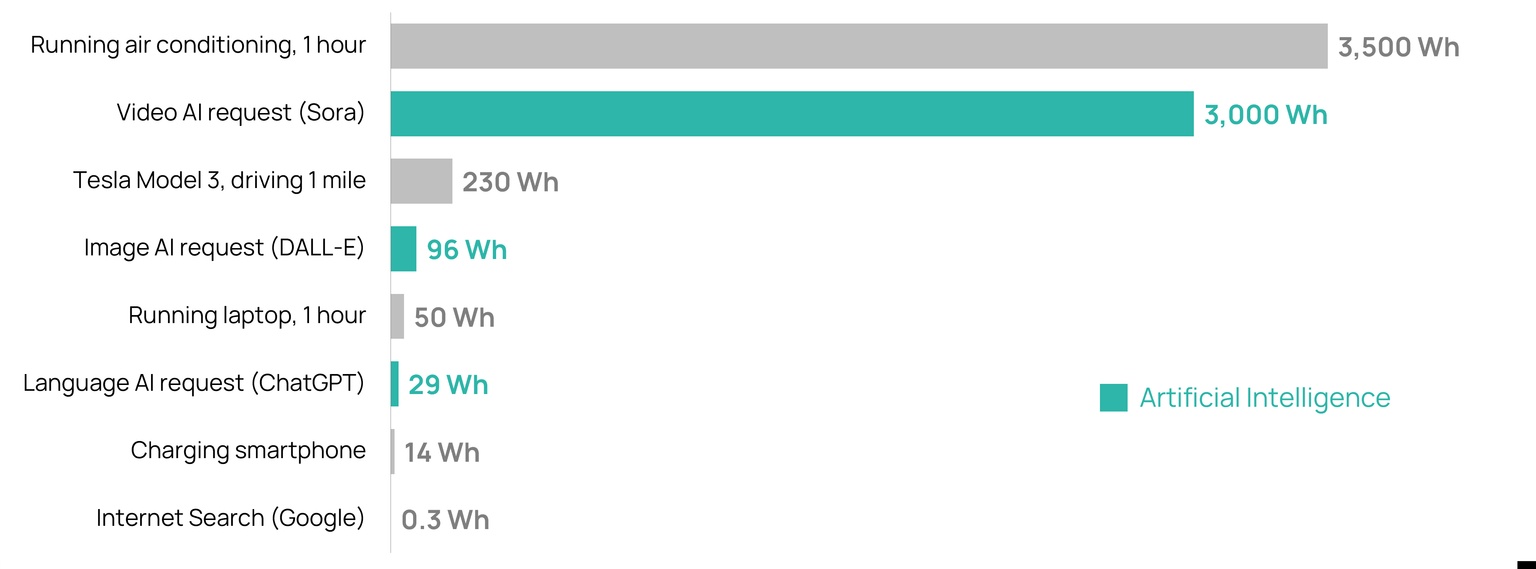

Source: Orennia, Blackstone, Tilburg.ai

Many users have already replaced Google with ChatGPT, which is many times more energy intensive. A single request to OpenAI’s chatbot uses 10 times as much electricity as a Google search. Asking an AI image generator like DALL-E to imagine what Harry Potter would have looked like in the 1970s uses energy more comparable to driving a Tesla than running an internet search. And using software like Sora to make a single AI video rivals running an air conditioner for an hour. It’s no surprise that heavy use of these technologies has people wondering how the resulting energy needs will be met.

This may come as a shock to some Gen Z readers, but not too long ago most companies hosted their own servers in their own buildings, known as on premises or “on prem.” As the world digitized and use of the cloud grew, so did demand for larger spaces designed specifically to house and network servers. This eventually led to today’s modern data centers: large, 100,000-square-foot, shoebox-shaped buildings that can house tens of thousands of servers. Backup infrastructure, data management and cooling systems in these buildings help ensure the servers run efficiently and reliably.

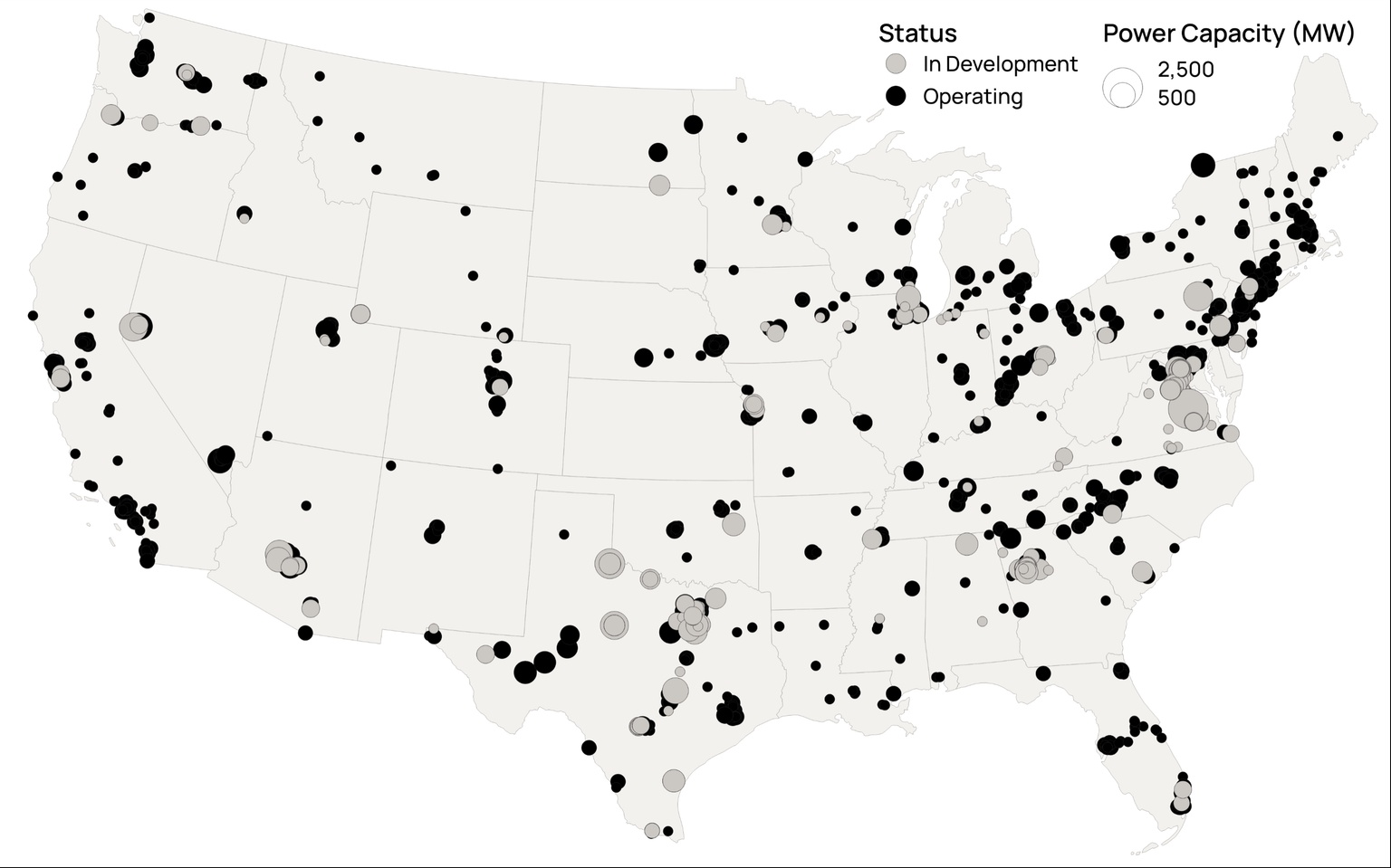

Source: Orennia

Scale also helps with cost: Instead of hundreds of companies each maintaining their own cooling systems and IT professionals, shared resources make data storage and computing more affordable to own and operate.

An Apple iPhone user looking to store 900 pictures of their cockapoo in the cloud has very different data needs from a bank that must securely protect the banking details of 200,000 clients. This creates natural segmentation in the industry.

The key segment types:

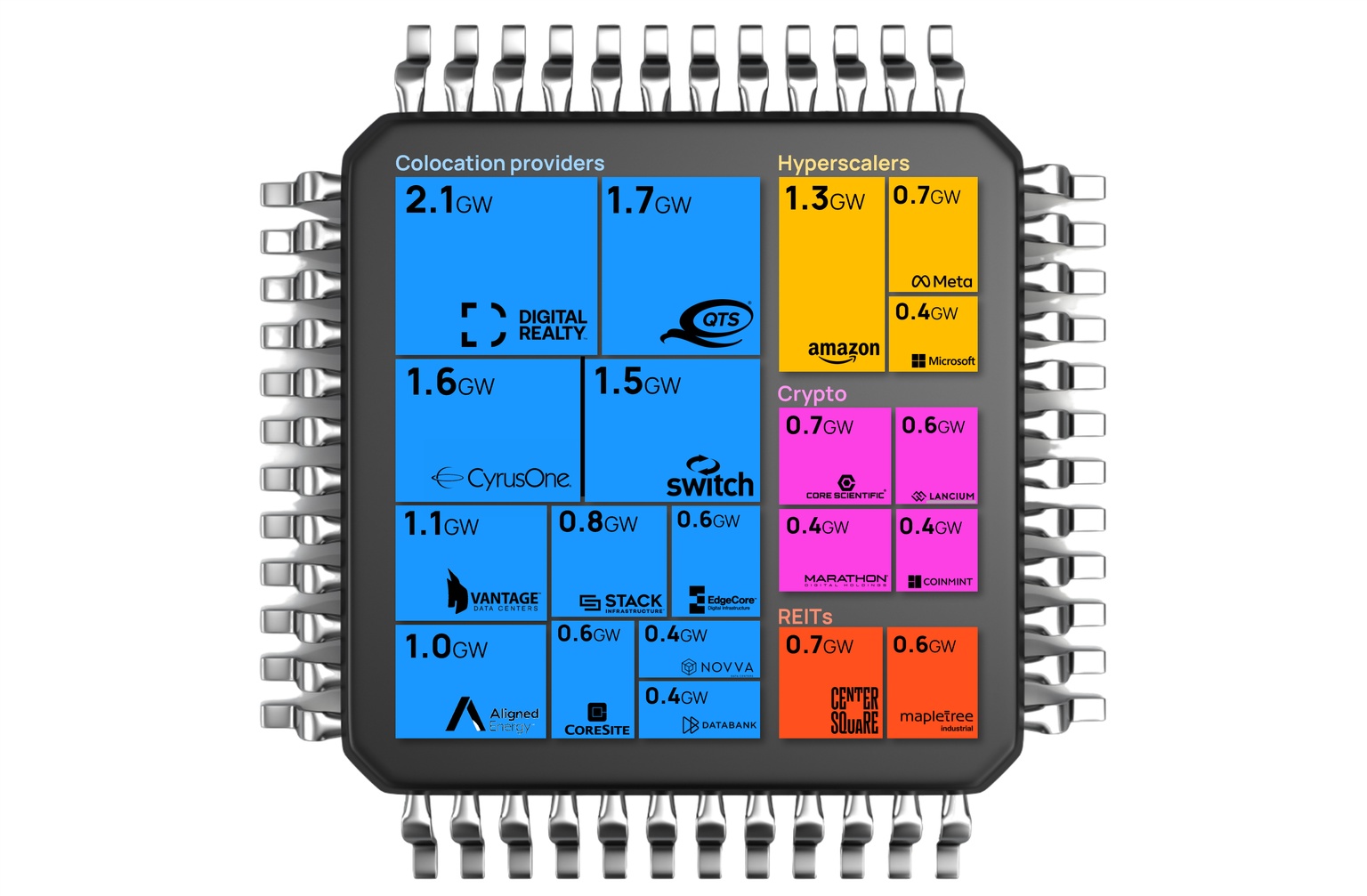

The various data center companies operate in different segments. In aggregate, the companies with the largest total data center capacities in the US are colocation providers, according to our data.

Source: Orennia

With different user types come different needs and preferences. Some customers are price-sensitive but tolerant of occasional downtime. Others will pay hefty premiums to avoid downtime altogether. This is where the four data center tiers come in.

Data center tiers:

Local retailers or small consultancies might be fine with the occasional downtime that comes with a lower-cost Tier 1 data center, but major banks and large e-commerce websites like Amazon require the reliability of Tier 4.
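The tier system comes from the Uptime Institute, and each tier is commonly associated with an availability target. As a rough illustration, the Python sketch below converts those often-quoted targets (99.671%, 99.741%, 99.982% and 99.995%, which do not come from this article and should be treated as assumptions) into expected hours of downtime per year:

```python
# Commonly quoted availability targets per tier (assumed figures, not from this article)
TIER_AVAILABILITY = {
    "Tier 1": 0.99671,
    "Tier 2": 0.99741,
    "Tier 3": 0.99982,
    "Tier 4": 0.99995,
}

HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_downtime_hours(availability: float) -> float:
    """Expected hours of downtime per year for a given availability fraction."""
    return HOURS_PER_YEAR * (1 - availability)


if __name__ == "__main__":
    for tier, availability in TIER_AVAILABILITY.items():
        hours = annual_downtime_hours(availability)
        print(f"{tier}: {hours:.1f} hours/year ({hours * 60:.0f} minutes)")
```

Under these assumed targets, a Tier 1 facility could be down for nearly 29 hours a year, while Tier 4 allows only about 26 minutes, which is the gap a bank is paying to close.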

What has really transformed the power sector is how much electricity data centers already use, combined with how quickly that use is expected to grow. According to the International Energy Agency, data centers accounted for 1% to 1.3% of global electricity demand in 2022, or between 230 and 340 terawatt-hours (TWh). Those figures don’t include crypto mining, which accounts for another 0.4% of global electricity demand.

Sources: Orennia, the International Energy Agency
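One way to read the IEA range is to back out the size of the global electricity market it implies. The minimal Python sketch below assumes that the low ends (230 TWh, 1%) and the high ends (340 TWh, 1.3%) of the two ranges pair up, which the source does not state explicitly:

```python
# IEA ranges for 2022 quoted in the text above
DATA_CENTER_TWH = (230.0, 340.0)    # annual data center electricity use, TWh
DATA_CENTER_SHARE = (0.010, 0.013)  # share of global electricity demand


def implied_global_demand_twh(use_twh: float, share: float) -> float:
    """Total demand implied by a sector's consumption and its percentage share."""
    return use_twh / share


# Assumption: the low ends and the high ends of the two ranges go together
low = implied_global_demand_twh(DATA_CENTER_TWH[0], DATA_CENTER_SHARE[0])
high = implied_global_demand_twh(DATA_CENTER_TWH[1], DATA_CENTER_SHARE[1])

print(f"Implied global electricity demand: {low:,.0f} to {high:,.0f} TWh")
```

Both ends land in the low-to-mid 20,000s of TWh, so crypto mining’s extra 0.4% works out to something on the order of 100 TWh.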

The surge in AI applications is driving the need for more data centers, which are expected to contribute meaningfully to load growth over the rest of the decade. Goldman Sachs estimates that global power demand from data centers will increase 160% by 2030, making them the single largest driver of new electricity demand over the period, ahead of electric vehicles and home electrification.

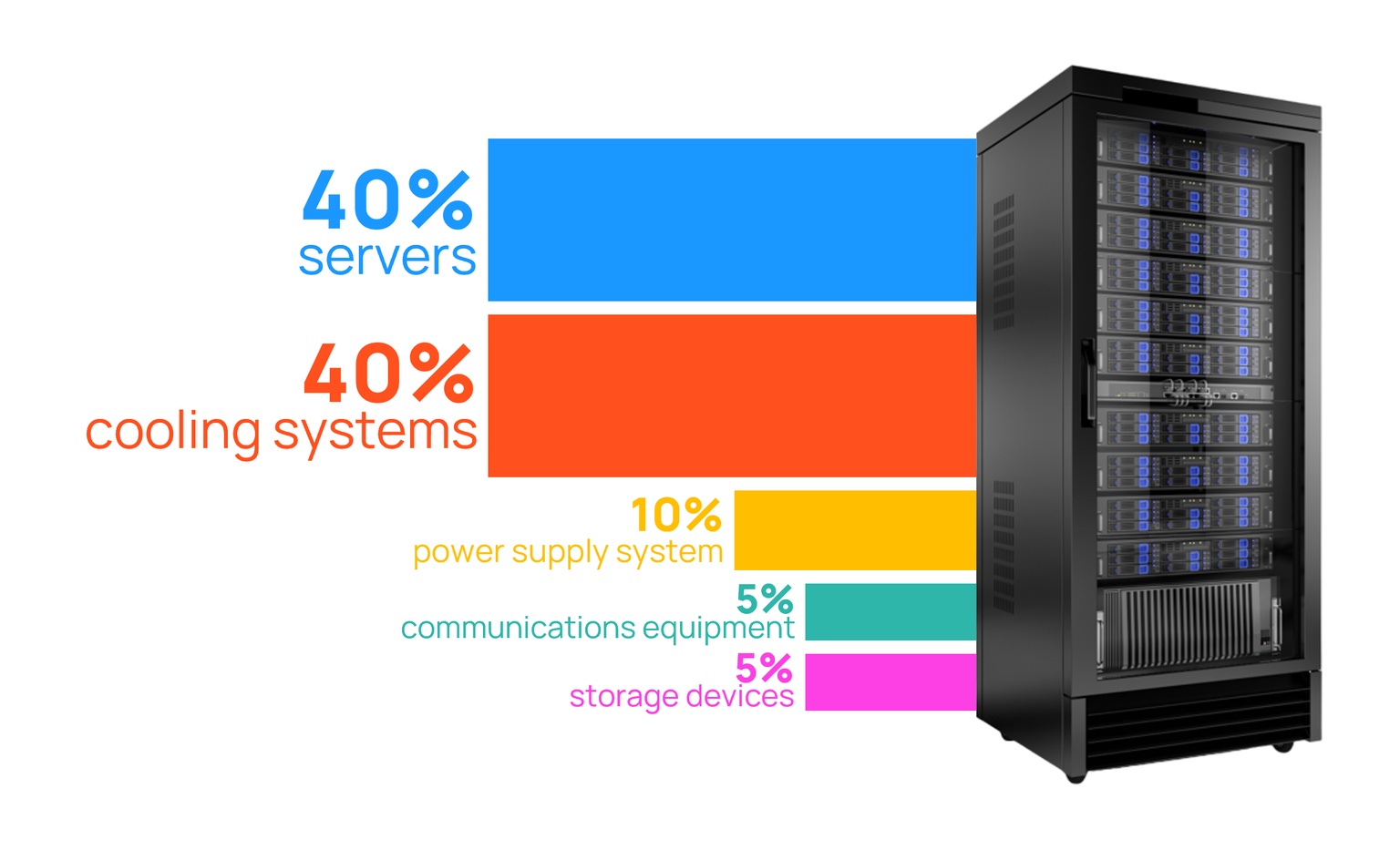
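A 160% increase means demand ends the period at 2.6 times its starting level. To see what that implies year to year, the Python sketch below computes the compound annual growth rate. The 2023 baseline is an assumption, since the estimate as quoted only gives the end year:

```python
def implied_cagr(total_increase: float, years: int) -> float:
    """Annual rate that compounds to `total_increase` (1.60 means +160%) over `years`."""
    return (1 + total_increase) ** (1 / years) - 1


# Assumption: growth is measured from a 2023 baseline, i.e. seven years to 2030
rate = implied_cagr(1.60, 2030 - 2023)
print(f"Implied growth: {rate:.1%} per year")
```

Under that assumption the rate comes to roughly 14.6% a year; shifting the baseline to 2022 (eight years) lowers it to about 12.7%.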

What the power is used for: First and foremost, the basic function of the microchip. Electricity switches the billions of transistors on a chip on and off to process information and transmit signals, which accounts for 40% of data center energy use. Cooling plays an equal role. Servers and IT equipment generate heat as they operate, and that heat degrades their performance. Air and liquid cooling systems keep equipment functioning by removing waste heat, a process that accounts for another 40% of energy consumption. The remaining 20% goes to the balance of plant: power supply, equipment and storage.
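Applied to a single facility, the 40/40/20 split looks like the Python sketch below. It assumes a hypothetical site drawing a constant 50 MW all year, a number chosen only for illustration:

```python
# Shares of data center energy use quoted in the text above
ENERGY_SHARES = {
    "computing": 0.40,
    "cooling": 0.40,
    "balance of plant": 0.20,
}

HOURS_PER_YEAR = 24 * 365  # non-leap year


def split_energy(total_mwh: float) -> dict[str, float]:
    """Allocate a facility's annual consumption across end uses."""
    return {use: total_mwh * share for use, share in ENERGY_SHARES.items()}


# Hypothetical facility: constant 50 MW load for a full year
annual_mwh = 50 * HOURS_PER_YEAR
breakdown = split_energy(annual_mwh)

for use, mwh in breakdown.items():
    print(f"{use}: {mwh:,.0f} MWh")
```

Of the hypothetical 438,000 MWh, 175,200 MWh would go to cooling, the same amount that powers the chips themselves.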

Billion-dollar problems often spur billion-dollar solutions. The prospect of power access becoming the limiting factor in data center growth, together with the challenges of siting new facilities, has prompted companies to make chips more efficient. Earlier this year, chipmaker Nvidia announced that its next-generation Blackwell graphics processing unit (GPU) for AI workloads was up to 25 times more energy efficient than its predecessor. While a marked improvement, it is unlikely to tame load growth.

Why not: With access to more efficient chips, developers will almost certainly choose to boost data center performance rather than build smaller facilities that use less power. That would be in keeping with history.

Since Jack Kilby debuted the integrated circuit in 1958, the energy efficiency of chips has improved roughly 15 billion times. Those gains did not reduce the total energy use of computing; instead, they created opportunities for new use cases and industries to emerge. Better GPUs are more likely to lower costs, making computation affordable for industries where it is currently too expensive.
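The history above boils down to simple arithmetic: total energy use equals the amount of computation performed divided by the computation delivered per unit of energy. Economists often call the outcome the Jevons paradox, or rebound effect, though this article does not use that label. In the Python sketch below, the 25x efficiency gain echoes the Nvidia figure, while the 40x growth in demand is purely hypothetical:

```python
def total_energy(compute_demand: float, efficiency: float) -> float:
    """Energy needed = work performed / work delivered per unit of energy."""
    return compute_demand / efficiency


# Normalized starting point: one unit of work at today's efficiency
baseline = total_energy(compute_demand=1.0, efficiency=1.0)

# Chips become 25x more efficient and the workload stays the same...
same_work = total_energy(compute_demand=1.0, efficiency=25.0)
# ...versus cheaper compute unlocking 40x more work (illustrative assumption)
more_work = total_energy(compute_demand=40.0, efficiency=25.0)

print(f"Same workload: {same_work / baseline:.2f}x baseline energy")
print(f"Expanded workload: {more_work / baseline:.2f}x baseline energy")
```

Running the same workload would need only 4% of the original energy, but if cheaper compute unlocks 40 times more work, total consumption ends up 60% above baseline. Efficiency cuts total energy use only when demand grows more slowly than efficiency improves.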

The future of AI and data centers is not set in stone. A growing chorus argues that the pace of investment in AI is unsustainable. In late June, Goldman Sachs published a white paper that went viral, highlighting that tech giants look set to spend $1 trillion on AI capex even though spending to date has little economic benefit to show for it.

On the other hand, AI also shows flashes of incredible potential. In 2021, AlphaFold, an AI program developed by Google’s DeepMind, accurately predicted protein structures, a problem that had challenged biologists for 50 years, paving the way for drug discovery and a better understanding of disease. And earlier this year, researchers at Princeton used AI to forecast and avoid plasma instabilities in a fusion reactor, a key hurdle on the road to commercial fusion power.
